The EU AI Act: Navigating the Future of Artificial Intelligence

Impact of AI on the Financial Sector

Data Privacy Concerns

AI systems often rely on vast amounts of personal data. Misuse of such data can lead to violations of the right to the protection of personal data and to hefty penalties imposed on AI providers by supervisory authorities.

Model Risk

AI models, especially those that are complex and opaque, might produce unintended or unexplainable results. If not properly validated or understood, these models can lead to significant financial errors.

Algorithmic Bias and AI-Automated Discrimination

If the data used to train AI models contain biased human decisions, the models can perpetuate or even exacerbate those biases, leading to unfair or discriminatory outcomes, especially in areas like creditworthiness assessments or insurance underwriting.
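
One way to monitor for the discriminatory outcomes described above is to compare approval rates across applicant groups. The snippet below is a minimal sketch, assuming decisions are available as (group, approved) pairs. The function names, the synthetic data and the 0.8 review threshold are hypothetical choices for illustration and are not requirements of the EU AI Act.

```python
from collections import defaultdict

# Hypothetical internal review threshold; not a value set by the EU AI Act.
REVIEW_THRESHOLD = 0.8

def approval_rates(decisions):
    """Approval rate per group, computed from (group, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, was_approved in decisions:
        totals[group] += 1
        if was_approved:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}

def min_max_rate_ratio(rates):
    """Lowest group approval rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no approvals in any group, so there is no gap to measure
    return min(rates.values()) / highest

# Synthetic decisions for two hypothetical applicant groups, A and B.
sample = [("A", True)] * 8 + [("A", False)] * 2 + [("B", True)] * 5 + [("B", False)] * 5
rates = approval_rates(sample)
ratio = min_max_rate_ratio(rates)
needs_review = ratio < REVIEW_THRESHOLD
print(rates)            # {'A': 0.8, 'B': 0.5}
print(round(ratio, 3))  # 0.625
print(needs_review)     # True
```

A low ratio is a prompt for investigation rather than proof of discrimination; differences in approval rates can have legitimate explanations that only a closer review of the model and its data can establish.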

Cybersecurity Vulnerabilities

AI systems can become targets for malicious threat actors. For example, attackers might use adversarial inputs to deceive AI models, thereby causing them to make erroneous decisions.

Over-reliance on Automation

Undue dependence on AI-driven automation can take human operators out of the loop, so that context or nuances that machines overlook may go unnoticed.

Job Displacement

As AI automates more tasks, there is a concern about job losses within the financial sector, especially in roles that involve repetitive tasks.

Regulatory and Compliance Risks

As AI becomes more integral to the financial sector, regulatory bodies around the world are stepping up their oversight. Institutions may face challenges in ensuring their AI-driven processes comply with evolving regulations.

Operational Risks

System outages, AI model failures, or other malfunctions can disrupt services, potentially leading to financial losses and reputational damage.

Reputational Risks

If a financial institution’s AI system causes a high-profile error or is involved in an activity deemed unethical (e.g., biased decision-making), the institution’s reputation could be harmed.

EU AI Act: Overview

As the momentum of artificial intelligence (AI) accelerates, regulatory efforts are intensifying in parallel. Jurisdictions worldwide, including the USA, Singapore, China and the EU, are crafting their own regulatory frameworks to address their specific concerns, which may result in a fragmented global digital landscape and an increase in complexity.

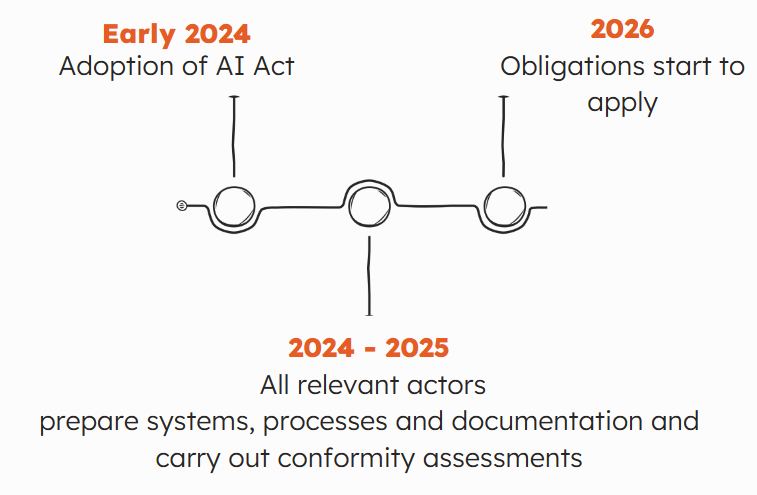

The EU Artificial Intelligence Act (EU AI Act) was proposed by the European Commission in April 2021 and is the first legislation of its kind to propose horizontal regulation of AI across the EU, focusing on the use of AI systems and the associated risks. A political agreement was reached in December 2023, and the legislative procedure is therefore expected to be concluded in early 2024.

Prohibited applications are reported to include biometric categorization based on sensitive characteristics, facial recognition systems (with exceptions and subject to safeguards), emotion recognition in the workplace or in education, certain types of predictive policing, and social scoring based on behavior or personal characteristics.

Regarding the governance of foundation models, the initial agreement requires such models to comply with specific transparency requirements prior to market entry. A more rigorous regulatory framework is foreseen for ‘high impact’ foundation models, which are characterized by training on extensive datasets and by a level of complexity, capabilities, and performance significantly above the norm.

With respect to ‘general purpose AI’ (such as ChatGPT), the AI Act will impose binding obligations relating to risk management, monitoring of serious incidents, model evaluation and adversarial testing.

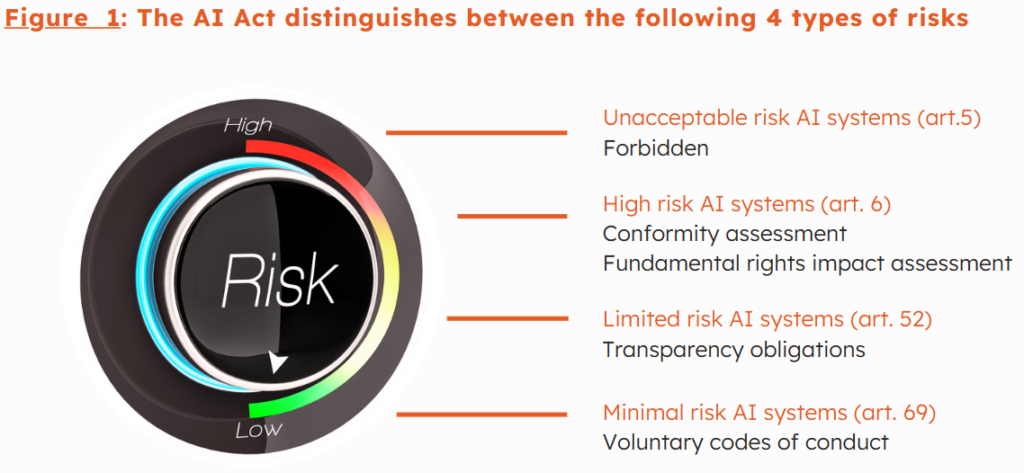

AI systems are to be classified according to their risk profile.

Areas of Complexity

The primary challenges for financial institutions in implementing the EU AI Act revolve around compliance and adaptation. Financial institutions often employ complex and advanced AI systems for operations such as risk assessment, fraud detection, and customer service. Adhering to the Act’s stringent requirements therefore calls not only for a thorough understanding of the legislation, but also for a review and potential overhaul of these systems to ensure they meet the new standards, especially around transparency, data governance, and bias mitigation. This process requires substantial investment in technology and expertise, as well as ongoing vigilance to keep pace with evolving AI technologies and regulatory updates, placing significant operational and financial burdens on financial institutions.

Below are important issues that financial institutions must look out for in 2024:

Complexity in the Act’s Material Scope

Financial institutions must assess whether their AI-based systems would be classified as ‘high’, ‘limited’ or ‘minimal’ risk. Examples of high-risk AI systems in the financial sector include credit scoring models, as these can influence a person’s access to financial resources. In the insurance sector, systems used for the automated processing of insurance claims would likely be classified as high-risk too.
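
A first step in this assessment is often an inventory of AI systems with a provisional risk tier for each. The snippet below is a minimal triage sketch, assuming each system is tagged with a use-case label. The tags, the `provisional_tier` helper and the limited- and minimal-risk entries are hypothetical; the high-risk entries simply follow the examples above. It does not replace a legal classification under the Act.

```python
from dataclasses import dataclass
from enum import Enum

class RiskTier(Enum):
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"
    NEEDS_REVIEW = "needs_review"

# Hypothetical use-case tags. High-risk entries follow the examples in this
# article; the limited- and minimal-risk entries are assumptions. Not exhaustive.
HIGH_RISK_USE_CASES = {"credit_scoring", "insurance_claims_automation"}
LIMITED_RISK_USE_CASES = {"customer_chatbot"}
MINIMAL_RISK_USE_CASES = {"internal_spam_filter"}

@dataclass
class AISystem:
    name: str
    use_case: str

def provisional_tier(system: AISystem) -> RiskTier:
    """Return a provisional risk tier; anything unmapped is flagged for review."""
    if system.use_case in HIGH_RISK_USE_CASES:
        return RiskTier.HIGH
    if system.use_case in LIMITED_RISK_USE_CASES:
        return RiskTier.LIMITED
    if system.use_case in MINIMAL_RISK_USE_CASES:
        return RiskTier.MINIMAL
    # Unknown use cases are never defaulted to minimal risk.
    return RiskTier.NEEDS_REVIEW

inventory = [
    AISystem("retail_credit_scorer", "credit_scoring"),
    AISystem("claims_engine", "insurance_claims_automation"),
    AISystem("service_bot", "customer_chatbot"),
    AISystem("fraud_monitor", "fraud_detection"),
]
for system in inventory:
    print(f"{system.name}: {provisional_tier(system).value}")
```

Routing unmapped systems (here, the fraud detection example) to a review queue rather than to ‘minimal’ keeps the inventory conservative until the classification has been confirmed.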

Conformity Assessment

During the grace period before the Act’s obligations become applicable, financial institutions making use of high-risk AI systems will be required to demonstrate that they comply with the obligations the Act imposes on such systems and to carry out a fundamental rights impact assessment. Translating all these requirements and principles into practical arrangements is a complex task.

Cultivating an Ethical Corporate Culture through Training

On top of performing a conformity assessment, financial institutions should more generally raise awareness by ensuring that their employees receive proper training on the ethical use of AI systems, establish formal governance arrangements, assign clear responsibilities across their different departments, and engage with the public and other stakeholders to build trust and ensure explainability and transparency in AI operations.

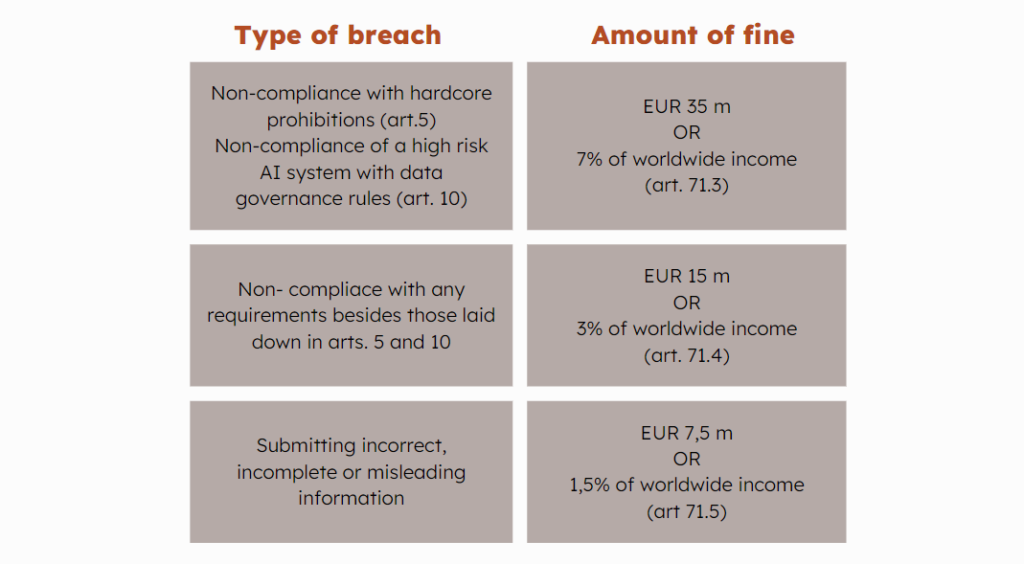

Consequences for Non-Compliance

Important Deadlines

T3 Services

This publication has been prepared for general guidance on matters of interest only, and does not constitute professional advice. You should not act upon the information contained in this publication without obtaining specific professional advice. No representation or warranty (express or implied) is given as to the accuracy or completeness of the information contained in this publication, and, to the extent permitted by law, T3 Consultants Ltd, its members, employees and agents do not accept or assume any liability, responsibility or duty of care for any consequences of you or anyone else acting, or refraining from acting, in reliance on the information contained in this publication or for any decision based on it.