Implementing AI Risk Management for Secure Systems

Introduction

As the technology landscape keeps changing, AI risk management has become a fundamental concern for businesses and individuals alike. As artificial intelligence becomes more embedded across industries, the need for secure systems that use AI grows with it.

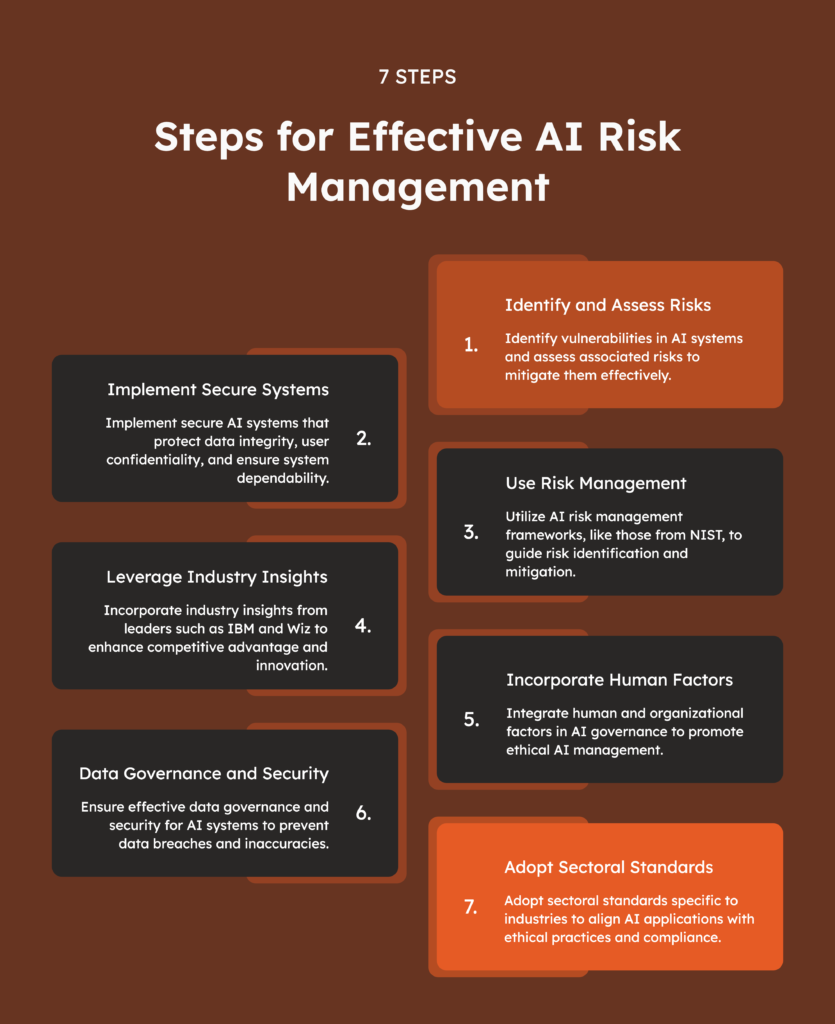

Secure AI systems maintain data integrity, preserve user confidentiality, and support system dependability. Fundamentally, AI risk management consists of identifying vulnerabilities in AI technologies, evaluating the associated risks, and deploying controls to manage those risks. Secure systems underpin this process by providing countermeasures against unauthorized access and data leakage.
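The identify, evaluate, and manage cycle described above can be illustrated with a small risk register. This is a minimal sketch rather than a prescribed method: the `Risk` class, the 1-to-5 likelihood and impact scales, the threshold of 12, and the sample entries are all hypothetical values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    """One entry in a hypothetical AI risk register."""
    name: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (negligible) to 5 (severe)
    mitigation: str = ""

    @property
    def score(self) -> int:
        # Simple likelihood x impact scoring; other schemes are possible.
        return self.likelihood * self.impact


def prioritize(risks, threshold=12):
    """Return the risks scoring at or above the threshold, highest first."""
    flagged = [r for r in risks if r.score >= threshold]
    return sorted(flagged, key=lambda r: r.score, reverse=True)


# Illustrative entries only; a real register comes from an actual assessment.
register = [
    Risk("Training data leakage", 3, 5, "Encrypt data at rest"),
    Risk("Unauthorized model access", 4, 4, "Role-based access control"),
    Risk("Stale documentation", 4, 1),
]

for risk in prioritize(register):
    print(f"{risk.score:>2}  {risk.name}: {risk.mitigation}")
```

With these illustrative values, the two entries scoring 16 and 15 are flagged for mitigation, while the low-impact entry (score 4) falls below the threshold.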

As organizations increasingly adopt AI-powered solutions, knowing how to manage these risks is critical to sustaining a competitive advantage and earning stakeholders’ trust. Keeping AI systems secure and dependable promotes innovation while limiting potential harm.

Overview of AI Risk Management Frameworks

Businesses increasingly rely on AI to run their operations, so understanding AI risk management frameworks is fundamental for organizations that want to manage risk while maximizing the value of their AI systems.

AI risk management frameworks are structured processes for recognizing, evaluating, and addressing the risks that come with deploying AI technologies. These frameworks help companies address challenges such as data privacy issues, bias, and unintended consequences that may emerge from adopting AI tools.

One of the most widely referenced frameworks is the AI Risk Management Framework (AI RMF) published by the National Institute of Standards and Technology (NIST), a U.S. federal agency that develops standards and guidelines. The AI RMF is voluntary guidance for responsible AI system development, and it works to foster trust in AI applications by encouraging transparency, traceability, and auditability.

NIST offers detailed guidance to help companies evaluate and mitigate the risks associated with AI. A major benefit of NIST’s role is that its framework is thorough, broadly accepted, and applicable across sectors.

By creating assessment and management criteria for AI systems, NIST helps companies establish robust practices that keep safety and ethical implications at the forefront. This guidance builds confidence among industry participants and supports the responsible evolution of AI technology.

Apart from NIST’s framework, various sector-specific standards and guidelines for AI risk management have been released. These often supplement NIST’s methodology with tools for tackling sector-specific risks. Implementing a strong AI risk management framework not only protects against risks but also positions organizations to take advantage of the opportunities that AI brings.

Staying abreast of new standards, guidelines, and best practices allows companies to effectively manage the complexities of AI risk and implement AI systems responsibly.

Industry Insights and Practical Implementations

In a rapidly changing technology and market environment, industry insights and their effective practical implementation are important for maintaining competitiveness. Case studies of how industry leaders such as IBM and Wiz innovate and manage risk, especially in artificial intelligence, are instructive.

To What Extent Do Industry Leaders Practice What They Preach

Industry leaders such as IBM have traditionally driven technological innovation, backed by powerful research and development arms whose work leads to practical implementations that redefine industries. For example, IBM’s vision of AI goes beyond basic automation to AI systems that can learn and improve over time. IBM focuses not only on efficient and cost-effective AI solutions but also on transparent and ethical AI, in response to rising concerns about bias in AI decision-making.

Meanwhile, Wiz, an emerging player in the cloud security space, has made a name for itself as a disruptor that implements practical solutions quickly. Wiz distinguishes itself with security measures that protect sensitive information in cloud environments. By prioritizing user security in its practical implementations, Wiz offers industry insights that help businesses protect their digital assets.

Industry Insights on AI Risk Management

The fast pace of AI innovation introduces new risks. IBM is a leading source of insights on how to deal with them, and it stresses that businesses need to recognize AI risks such as bias in algorithmic decision-making and data privacy issues.

IBM argues that risk management needs to form an integral part of the AI development process, not only to prevent potential harm but also to ensure that AI systems are used responsibly. Similarly, Wiz contributes to this discussion by approaching AI risk through a cybersecurity lens.

Wiz’s insights highlight the importance of securing AI systems against potential threats. By taking a proactive approach to risk management, companies can reduce their exposure to cyber attacks and protect their investments in AI.

This reinforces the idea that a deep knowledge of AI systems and their risks is crucial for implementing them successfully. The practical implementations by industry leaders like IBM and Wiz offer a wealth of insights for managing AI-related risks, and their robust, responsible, and secure implementations set a standard for others.

Understanding and incorporating risk management is fundamental to sustaining a competitive edge in a fast-moving technological space. For businesses that seek to adopt AI, learning from industry pioneers is vital to handling the risks and opportunities ahead.

Leveraging Human and Organizational Factors in AI Risk Management

As we navigate the new frontiers of artificial intelligence (AI), robust risk management becomes essential to positive societal outcomes from the technology. Humans and organizations play a key role in shaping AI governance and minimizing AI-related risks. This highlights the need for a holistic approach that encompasses not only the technological domain but also the human-centric and organizational domains.

Human factors involve the cognitive abilities, limitations, and interactions of the individuals who develop or use AI. They influence how decisions are made, risks are perceived, and errors are managed within AI systems. Organizational factors, on the other hand, cover the culture, structure, policies, and practices that determine how an organization deploys and uses AI technologies. Both sets of factors form a key part of a comprehensive AI risk management approach.

How can human and organizational factors be considered in AI governance? Here are some proposed good practices:

- Transparent and Accountable Cultures: Cultivate organizational cultures in which AI is managed ethically and in alignment with societal values. This includes setting clear principles and well-defined objectives, and engaging stakeholders at all levels in decision-making processes.

- Training and Lifelong Learning: Educate teams about the capabilities and limitations of AI so that they can anticipate risks earlier and mitigate them more effectively.

- Cross-Disciplinary Collaboration: Encourage collaboration among experts from different domains, which can refine AI applications and improve risk assessment.

- Audit and Feedback Loops: Establish processes to detect deviations and potential weaknesses in AI systems.

Emphasizing human judgment and organizational backing strengthens risk management frameworks and advances responsible and sustainable AI technologies.
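As one illustration of an audit and feedback loop, the sketch below compares a recent batch of model scores against a baseline and records whether the batch should be escalated to a human reviewer. It is a simplified example under stated assumptions: the function names, the sample scores, and the z-score threshold of 3.0 are hypothetical, and a production system would likely use more robust drift statistics.

```python
from statistics import mean, stdev


def needs_review(baseline, recent, z_threshold=3.0):
    """Return True when the recent batch mean deviates from the baseline
    mean by more than z_threshold standard errors (assumed cutoff)."""
    base_mean = mean(baseline)
    base_sd = stdev(baseline)
    if base_sd == 0:
        # Constant baseline: any change in the mean counts as a deviation.
        return mean(recent) != base_mean
    std_err = base_sd / len(recent) ** 0.5
    return abs(mean(recent) - base_mean) / std_err > z_threshold


def audit(batch_id, baseline, recent, log):
    """Append an audit record for the batch and return the review flag."""
    flagged = needs_review(baseline, recent)
    log.append({
        "batch": batch_id,
        "mean": round(mean(recent), 4),
        "flagged": flagged,
    })
    return flagged


# Illustrative scores only.
baseline_scores = [0.48, 0.52, 0.50, 0.49, 0.51]
audit_log = []
audit("batch-001", baseline_scores, [0.50, 0.51, 0.49, 0.50], audit_log)
audit("batch-002", baseline_scores, [0.70, 0.72, 0.68, 0.71], audit_log)

for entry in audit_log:
    print(entry)
```

Here the first batch stays close to the baseline and passes, while the second is flagged. The flag is only a prompt for human judgment, and the log gives reviewers a record to feed back into the process.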

Data Governance and Security in AI: An In-Depth Perspective

As artificial intelligence advances rapidly, managing and securing data remains paramount to implementing AI systems effectively and securely.

Data governance goes beyond merely organizing and managing data; it is a fundamental aspect of risk management in AI. AI systems rely on vast amounts of data that need to be meticulously organized and managed to mitigate the risks of data breaches and inaccuracies.

- Data Governance: This involves the effective coordination of data collection, processing, storage, and retrieval. Ensuring a smooth flow of data is essential to prevent bottlenecks that might result in delays or errors.

- Security Enhancements: Strong security protocols protect both the AI models themselves and the data they operate on from unauthorized access and cyber threats. Controls such as encryption, authentication, and access control protect sensitive information and preserve the integrity of AI output.
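Two of the controls named above, access control and integrity protection, can be sketched with Python’s standard library. This is a minimal illustration, not a complete security design: the role names, the permission sets, and the demo key are hypothetical, and real keys belong in a secrets manager rather than in source code.

```python
import hashlib
import hmac

# Hypothetical role-to-permission mapping for a model registry.
ROLE_PERMISSIONS = {
    "ml_engineer": {"read_model", "update_model"},
    "analyst": {"read_model"},
}


def is_allowed(role: str, action: str) -> bool:
    """Role-based access check; unknown roles get no permissions."""
    return action in ROLE_PERMISSIONS.get(role, set())


def sign(artifact: bytes, key: bytes) -> str:
    """Compute an HMAC-SHA256 tag over a model artifact."""
    return hmac.new(key, artifact, hashlib.sha256).hexdigest()


def verify(artifact: bytes, key: bytes, expected_tag: str) -> bool:
    """Check the artifact against its tag using a constant-time comparison."""
    return hmac.compare_digest(sign(artifact, key), expected_tag)


# Demo values only; never hard-code a real key.
demo_key = b"demo-key-not-for-production"
weights = b"model-weights-v1"
tag = sign(weights, demo_key)

print(is_allowed("analyst", "update_model"))         # analyst cannot update
print(verify(weights, demo_key, tag))                # untouched artifact
print(verify(weights + b"tampered", demo_key, tag))  # modified artifact
```

The access check denies by default, so a role that is missing from the mapping gets no permissions. The HMAC tag lets a deployment pipeline detect whether a stored model artifact was modified after it was signed.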

Strong security measures support compliance with data regulations and cultivate user trust. With AI systems increasingly integrated into business operations and decisions, the protection of these systems from cyber threats becomes an urgent business issue.

In conclusion, efficient data management and strong security are essential to deploying and operating AI systems successfully. An emphasis on data governance and security will be key to unlocking the full capabilities of AI.

Sectoral Standards for AI Risk

As AI applications expand across industry sectors, well-defined sectoral standards become increasingly important. These standards are essential for AI risk management, helping applications follow ethical practices and build stakeholder trust.

Sectoral standards account for different requirements and challenges by sector, such as:

- Healthcare: Stringent confidentiality requirements for patient data.

- Financial Services: Demonstrable data protection against misuse and breaches.

Sectoral standards provide a framework to address potential biases, ensure data integrity, and keep pace with advancing AI technologies.

At the national and international levels, these standards align with foundational documents such as ISO/IEC 22989, which defines AI concepts and terminology, promoting coherence across sectors. A tailored approach helps organizations adopt new AI technology sustainably and responsibly.

Conclusion

Deploying a sound AI risk management framework is imperative for organizations aiming to maintain secure systems in today’s digital era. Through risk identification, continuous monitoring, and adaptive controls, organizations keep their processes secure and operational. A comprehensive framework manages threats proactively, promoting long-term development and the responsible use of AI across industries.