Responsible AI

AI Risk Management

Ensuring Responsible and Trustworthy AI

Artificial Intelligence (AI) presents significant opportunities for business growth and innovation, societal improvement, and human flourishing, but also introduces unique risks such as algorithmic bias, opaque decision-making, personalized misinformation at scale, privacy issues, and cybersecurity threats. Effective AI risk management, aligned with established frameworks like the NIST AI Risk Management Framework (NIST AI RMF), OECD AI Principles, EU AI Act, and ISO 42001, enables organizations to proactively identify, assess, and mitigate these risks. Implementing robust AI governance best practices not only fosters trust and regulatory compliance but also ensures the increased adoption of AI for societal gain and the responsible, secure, and ethical utilization of AI technologies.

Responsible AI: Building Trustworthy AI Systems That Align with Your Values

AI Risk Management is not just a framework; it’s a critical, forward-looking strategy that integrates operational excellence, organizational resilience, and ethical assessment to mitigate potential harms while amplifying the benefits of artificial intelligence. With AI reshaping industries, risk management has become essential to ensure innovation thrives within safe and responsible boundaries.

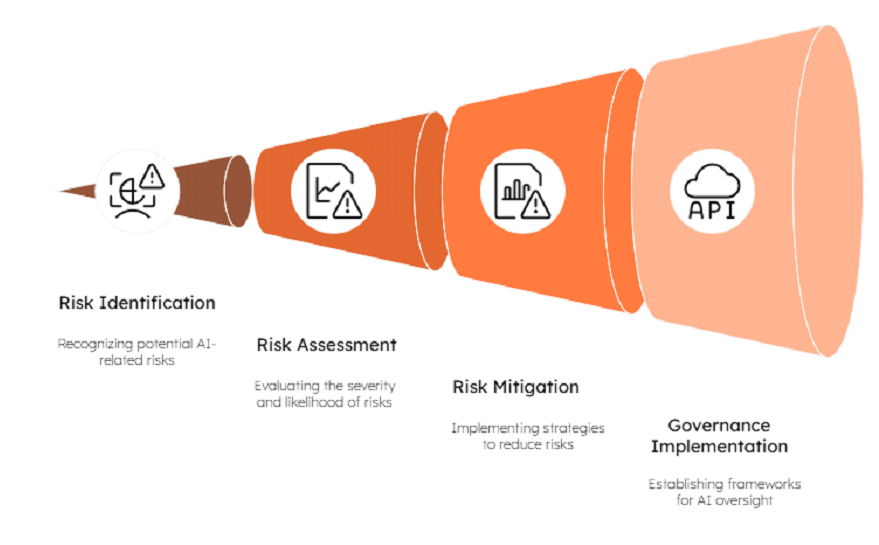

The key themes underpinning AI Risk Management:

Navigating the increasingly fragmented web of standards, frameworks and regulations, while distinguishing media-hyped fiction from fact, can feel overwhelming to many business leaders. At T3, we recommend identifying and aligning with a limited set of robust, expert-supported frameworks that will ensure compliance, strengthen trust with stakeholders, and reduce overhead. Specifically, we recommend, and anchor our own work in, the EU AI Act, ISO 42001, and the NIST AI Risk Management Framework.

To mitigate, manage, and prevent potential harms and violations to your business, users and consumers, and society more broadly, you need to proactively, diligently and regularly identify, understand and address risks. In the context of AI and risk management, this covers everything from detecting biases in algorithms to assessing ethical dilemmas and ensuring reliability. A well-designed and well-implemented AI risk assessment program safeguards against unforeseen challenges and reputational risks. You can’t anticipate and prevent all risks (be wary of people who claim you can), but robust risk management across the lifecycle, with well-defined, staffed and system-supported issue spotting and error management, can prevent most unintended and harmful AI issues.

Thorough risk management requires the implementation of varied and scalable controls at intervention points across the lifecycle. Risk mitigation can take many forms, from clear and actively enforced acceptable use policies to technical solutions, and from contractual obligations with your partners to user transparency and controls. Given resource constraints within most organizations, and the still-emerging best practices for preventing AI risks, addressing AI risks through expert AI risk assessment consulting should be proportional to the potential impacts and stay within the established bounds of your risk appetite. You can embed safeguards into system design, adopt cutting-edge technologies, and set up rigorous protocols to ensure risk remains at acceptable levels.
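As a rough illustration of keeping mitigation proportional to impact and within a risk appetite, the sketch below scores each risk as likelihood times impact and surfaces only those above an appetite threshold. The 1 to 5 scales, the example risks, and the threshold of 8 are all hypothetical assumptions for illustration, not values taken from any framework named here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    name: str
    likelihood: int  # hypothetical scale: 1 (rare) to 5 (almost certain)
    impact: int      # hypothetical scale: 1 (negligible) to 5 (severe)

    @property
    def score(self) -> int:
        # Simple likelihood x impact scoring; other scoring schemes exist.
        return self.likelihood * self.impact


def risks_above_appetite(risks, appetite):
    """Return the risks whose score exceeds the appetite, highest score first."""
    exceeding = (r for r in risks if r.score > appetite)
    return sorted(exceeding, key=lambda r: r.score, reverse=True)


# Illustrative register; the entries are made up for this example.
register = [
    Risk("biased loan recommendations", likelihood=3, impact=5),
    Risk("chatbot gives outdated opening hours", likelihood=4, impact=1),
    Risk("training data leak", likelihood=2, impact=5),
]

for risk in risks_above_appetite(register, appetite=8):
    print(f"{risk.name}: score {risk.score}")
```

In practice the scales and the appetite would be set by your own governance process, and low-scoring risks would still be logged and reviewed rather than ignored.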

Clear roles, responsibilities, and robust oversight provide the foundation for ethical AI development and deployment, fostering confidence among teams and external stakeholders alike. Comprehensive governance and oversight in the context of AI and risk management should be a shared responsibility across the whole organization, with individual functions, not just centralized risk or compliance teams, accountable for the responsible development, deployment, and usage of AI at all times. This involves not just defining clear governance processes and structures, but also providing ongoing education and driving organizational culture change and resilience.

AI systems are often opaque. We are learning every day how they work in novel situations, while still struggling to understand exactly how they make decisions and how to control their outputs in scalable and human-centered ways. At the same time, AI systems evolve and so do their risks. Regular evaluations enable institutions to anticipate new challenges, adapt to evolving situations, and continually enhance the effectiveness of their AI risk management strategies.

By weaving these AI risk management pillars into their operations, organizations not only protect themselves from potential pitfalls but also position AI as a trustworthy driver of progress. In the rapidly evolving AI landscape, managing risks isn’t just an obligation, it’s an opportunity to lead responsibly and innovate boldly.

Why managing AI Risks is important

Increasingly, AI risk management is viewed through a narrow lens, seen only as a compliance checkbox or an unwelcome cost that stifles innovation. This perspective is not only outdated and hyperbolic but also shortsighted.

Embracing robust AI risk management, supported by comprehensive AI risk assessment services, is not about erecting barriers; it’s about laying a resilient foundation for trust, fostering sustainable innovation, and building a more successful and enduring business.

If people don’t trust AI, they won’t use it, and as employees they won’t engage with it, limiting the potential benefits for people, businesses, and society more broadly.

As our T3 partner Jen Gennai always says “responsible AI *is* successful AI”.

AI Risk Management Process

AI Risk Management: From barrier to enabler

At T3 our stance is clear: AI risk management isn’t a compliance checkbox or innovation killer. It’s the foundation that makes meaningful innovation possible. Through our AI risk management consulting services, we help organizations take deliberate steps to ensure technology works as intended – not to limit possibilities, but to open them up responsibly.

Companies that treat risk as an afterthought inevitably face reputation damage, lost customer trust, and regulatory problems. Those who build responsibility into the development process from day one make better strategic decisions that protect operations and strengthen their reputation. Speed matters, but speed without responsibility is a recipe for ruin.

Trust in AI will break, and many companies will not recover

Usefulness, accuracy, consistency and transparency build confidence, but earning trust means more than building reliable systems. It requires showing your work. Transparency and accountability matter. This means explaining what models are doing in plain language (e.g. through model cards or datasheets), providing an overview of a system’s goals and limitations (e.g. through system cards or transparency reports), and demonstrating responsible AI commitments through ongoing action and iteration. AI will cause errors, issues and harms, some catastrophic. It’s how companies prepare for and respond to potential issues, and how they take responsibility, that makes the difference. Once lost, trust is almost impossible to regain. But when maintained, it creates a virtuous cycle of adoption, improvement, and loyalty.

Competitive Advantage Through Responsibility

AI risk management and responsible AI are not just about playing defense. Companies that embed responsible AI principles are creating lasting competitive advantage. Data shows that investing in responsible AI and ethical business practices improves talent recruitment and loyalty, consumer preference, and investor confidence, and ultimately leads to better products.

As AI capabilities become more widely available through APIs and open-source models, how you implement AI, whether aligned with human values, transparent, and dependable, becomes your true differentiator. Organizations using responsible AI approaches report improved data privacy, enhanced customer experiences, more confident business decisions, and stronger brand reputation. And at T3, we have the expertise and experience in AI risk assessment consulting to help you become a responsible AI industry leader.

Understanding key AI Risks

AI Bias

AI systems learn from data, and when that data reflects historical biases, the AI can reflect, perpetuate and even amplify these biases. Although many AI technologists acknowledge the risks of AI bias, they reduce their responses to simplistic solutions: analyzing their datasets for bias, increasing representation in training sets, or using automated systems to test their models and outputs for bias.

However, because bias is an inherently complex human condition and can creep in at any stage of the AI lifecycle, to properly address AI bias, we also have to think about the humans who play a role in AI’s design, labelling and deployment:

- who built, labelled, and sourced the data, and how could their biases be embedded in the data and flow through the model?

- who makes decisions? How, and by whom, are they informed, and what biases may they bring to the table?

- are there sufficiently multidisciplinary teams with different lived experiences to bring new types of thinking and drive better outcomes across the AI lifecycle?

- inclusive of both employees and your consumers, who is able to provide feedback and how? Who listens to, and decides what feedback is acted upon?

- what training, accountability, and governance have you implemented to ensure employees are equipped to identify and address potential harmful biases?

- who defined the policies on what is acceptable or not, and how did they consider different subgroups or cultural considerations?

Inclusive AI ensures greater market share, prevents harm, and bolsters an organization’s reputation as a responsible and trustworthy industry player.

“Black box” algorithms and unexplainable AI outcomes

In order to effectively debug AI algorithms, or to understand if outcomes are fair and accurate, organizations need to ensure that their AI systems are explainable to users, and interpretable by their engineers. With the EU AI Act, and other global regulations, transparency and auditability are also required by regulators and policymakers to ensure that AI systems are working as intended and not causing harm.

However, some of these conversations veer into excessive requests for “full transparency”. Access to algorithms and underlying systems may sound like a silver bullet to understanding AI but it comes with its own risks:

- User distrust: given AI is such a complex technology, lay people, whether end-users, consumers or policymakers, could become overwhelmed by full transparency. Frustration and lack of understanding could increase fear and actually lead to greater distrust of AI.

- Security risk: full transparency could reveal vulnerabilities that malicious actors could exploit, or enable reverse engineering by competitors or bad actors. This could lead to worse outcomes and less protection for end-users, negating some of the key reasons for calling for transparency.

- Oversimplification: simplified disclosures could cause misunderstanding and fail to answer important questions about contesting decisions, debugging issues, identifying errors, or appropriately auditing and controlling a system.

- Legal and regulatory challenges: Transparency may conflict with intellectual property protection or raise privacy concerns if it involves revealing sensitive data used in training.

Instead of focusing on transparent AI, we should continue investing in AI interpretability, explainability, auditability, and contestability, which are more likely to lead to understanding and trust. We should also be more diligent in holding those developing and deploying AI accountable for providing concise, clear and accessible AI artifacts (e.g. model cards, datasheets, transparency reports), and for opening their systems to trusted auditors and bug bounties.

Privacy & Security

- Privacy: AI systems, particularly those in financial services, often process vast quantities of personal and sensitive data. The risks here involve not only the potential for data breaches due to security flaws but also the misuse of data, even if unintentional. AI can enhance the capabilities for data breaches, enable deepfakes for fraudulent activities, expand the scale of unauthorized collection and use of private data, and re-identify individuals from supposedly anonymized datasets, posing significant threats to individual privacy.

- Security: AI systems can be targets of malicious attacks, and increasingly are coming under attack from AI-powered nefarious actors. These attacks include “data poisoning,” where training data is deliberately corrupted to manipulate the AI’s behavior, and “adversarial attacks,” where subtle, often imperceptible changes to input data can cause the AI to make incorrect classifications or decisions. AI can be weaponized by malicious actors to create more sophisticated cyberattacks, automate phishing schemes, or generate convincing deepfakes for fraud.

Privacy and security risk categories can be intertwined, with issues in one area exacerbating issues in the other. For example, a security breach could lead to a catastrophic privacy violation if sensitive training data is exposed. This underlines the importance of a comprehensive, robust and integrated approach to AI risk management.

AI Risk Monitoring and Reporting

Continuous monitoring and reporting allow for the ongoing management and adjustment to evolving AI risks, with the caveat that ongoing human oversight is required to ensure systems are working as intended, and that new, and emerging AI risks can be identified and understood before being covered by automated systems at scale.

Core AI Risk Monitoring and Reporting activities include:

- Utilize real-time analytics and anomaly detection.

- Track and monitor model drift and performance degradation.

- Automate dashboards for risk status, model performance, and regulatory compliance metrics.

- Consider using third-party platforms to automate risk visualization and emerging risk identification, as they may have access to more industry data for early warning.

- Hold regular (monthly or quarterly) AI risk and compliance reviews.

- Document review outcomes, incident outcomes, and risk mitigation effectiveness.

- Set up automatic alerts to react in real-time to critical situations.

- Ensure escalation paths and human intervention protocols are defined and stress-tested.

- Record data manipulations, model outputs and system actions.

- Support forensic investigations and compliance reporting.

- Ensure accessibility and analysis of user feedback reports or employee incident flags.

- Continually refine and iterate AI risk mitigation and management practices.
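One common way to track the model drift mentioned in the list above is to compare the distribution of a feature or model score in production against a baseline sample. The sketch below uses the Population Stability Index (PSI) for that comparison. The function names, the ten equal-width bins, and the 0.1 and 0.25 thresholds are illustrative assumptions (the thresholds are a widely used rule of thumb, not a requirement of any framework cited on this page).

```python
import math


def population_stability_index(baseline, current, bins=10):
    """PSI between a baseline sample and a current sample of numeric values."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all baseline values are equal

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp out-of-range values into the edge bins
            counts[idx] += 1
        # A small floor avoids log(0) and division by zero for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    p, q = proportions(baseline), proportions(current)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


def drift_status(psi, warn=0.1, alert=0.25):
    """Map a PSI value to a status; the thresholds are assumptions to tune per use case."""
    if psi >= alert:
        return "alert"
    if psi >= warn:
        return "warn"
    return "ok"


# Illustrative data: the current scores have shifted towards the upper half of the range.
baseline_scores = [i / 100 for i in range(100)]
current_scores = [0.5 + i / 200 for i in range(100)]

psi = population_stability_index(baseline_scores, current_scores)
print(drift_status(psi))
```

An "alert" status would typically feed the automatic alerts and human escalation paths listed above, rather than trigger an automated model change on its own.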

Our Impact on AI Risk Management

We partner with organizations across the private and public sectors to spark the behaviors and mindset that turn change into value. Here’s some of our work in culture and change.

Supporting a Bank in enhancing AI Literacy

CHALLENGE: Banks are increasingly deploying AI-driven solutions to enhance operational efficiency, customer experience, and risk management. However, many financial institutions face challenges in cultivating AI literacy.

OBJECTIVES:

- Align AI competencies with EU AI Act requirements and industry benchmarks

- Strengthen staff skills to minimise regulatory, reputational, and operational risks

APPROACH:

- Assess current literacy levels across teams

- Define clear, tailored learning objectives

- Develop e-learning modules and interactive workshops

- Consult external experts for targeted insights

- Establish continuous feedback loops for iterative improvement

KEY GAPS:

- Insufficient foundational AI understanding

- Regulatory confusion and compliance uncertainties

- Cultural inertia & reluctance to adopt new practices

- Heightened risk of biased AI outcomes

RESULTS:

- Increased staff confidence & AI proficiency

- Reduced AI-related risks through informed, compliant usage

- Stronger competitive advantage via responsible AI adoption and innovation

Enhancing Responsible AI in a Tech Firm

CHALLENGE: Augment and operationalise a Responsible AI (RAI) framework that meets regulatory requirements, reduces risk, and strengthens brand trust within a large tech firm

OBJECTIVES:

- Align with evolving regulations & tech

- Minimise legal & reputational risks

- Unlock new revenue opportunities & reinforce brand credibility

WHY IT MATTERS:

- Avoid costly fines & scrutiny through proactive compliance

- Gain a competitive edge by delivering transparent, future-proof AI solutions

- Bolster stakeholder trust & facilitate sustainable innovation

RESULTS:

- Streamlined compliance processes with measurable ROI improvements

- Ongoing user confidence & market-leading AI initiative

IMPLEMENTATION SET-UP:

- Review & Map: Assess existing RAI governance against recognised standards, identifying key gaps

- Governance & Oversight: Enhance RAI principles & form a dedicated AI Ethics Board to provide strategic guidance

- Frameworks & Tools: Develop fairness & impact testing methodologies; adopt/customise bias detection toolkits

- Transparency & Risk: Establish a tiered transparency reporting process & dynamic risk-tiering approach

- Audits & Improvement: Conduct regular audits to monitor bias, performance, and compliance; feed insights back into product development

- Lifecycle Integration: Embed RAI checks throughout product lifecycles & for emerging technologies

WHO DOES IT IMPACT?

- All firms looking to reduce cost

- C-suite, Risk & Compliance Team

- Financial Services

- Technology Companies

- Healthcare Companies

- Governments

AI Risk Management Services we Provide

Trainings

- AI Risk Management Training

- AI Literacy Training

- Responsible AI Training

- AI Governance

- “Fact or Fiction? AI Mythbusting Training”

AI Risk Management design and implementation, or integration into existing programs

AI Risk Management Maturity Assessment

We conduct a gap analysis of an organization’s AI risk management approach against standard frameworks and regulations to assess alignment and compliance.

Responsible AI Maturity Curve Mapping

AI Governance

Oversight & Accountability processes and structure design and implementation

Third Party Vendor Selection and Assessment

Frequently Asked Questions

Risk management is a discipline that, when done well, requires ongoing monitoring, oversight and iteration. Although AI can already complete certain aspects of traditional risk management, specifically in the areas of risk identification, quantification, and analysis, it is less useful in tackling, mitigating or preventing risks (though this may change in an agentic AI future, when AI will not just analyze data and provide outcomes but will also take actions).

AI can be useful at various intervention points across the AI risk management lifecycle. AI is often used in anomaly and fraud detection, where it can analyse and identify patterns and trends against increasingly complex, large-scale threats. AI is less suitable for identifying and tackling new and emerging risks and threats.

There is not a single risk framework for AI. The AI risk frameworks most often referenced are:

- The US NIST AI Risk Management Framework (AI RMF)

- ISO 42001: Artificial Intelligence Management Systems

- the EU AI Act, and

- the OECD’s report on Advancing accountability in AI: “Governing and managing risks throughout the lifecycle for trustworthy AI” (which is an aggregation of various OECD frameworks, including the OECD AI Principles, the AI system lifecycle, the OECD framework for classifying AI systems, the OECD Due Diligence Guidance for Responsible Business Conduct as well as the ISO 31000 risk-management framework and NIST’s AI RMF).

The NIST AI RMF is one of the most respected and most often cited frameworks, as it can be customized and applied to a broad range of industries and use cases, even for organizations not based in the US (disclosure: the author of these FAQs, Jen Gennai, was a contributor to a number of internationally recognized AI risk management frameworks, including the NIST AI RMF). It has four main functions (Map, Measure, Manage, and Govern), which map easily to existing risk management processes and systems.

Depending on the cloud or IT third party you use, some AI systems come with associated AI risk frameworks that are relevant to specific systems, domains and use cases. It’s important to ensure that any risk management framework aligns and integrates well with the risk management procedures and systems you already follow, to reduce resource, cost and time overhead.

Responsible AI is about developing, deploying, and using AI in a way that has positive impacts on individuals and society, and prevents or minimizes potential harm. Responsible AI (also interchangeably referred to as Ethical AI or Trustworthy AI) aims to ensure AI is fair, inclusive, explainable, accessible, safe, secure, privacy-protecting, accurate, robust, and fit-for-purpose.

Responsible AI is an ongoing, iterative process to ensure that AI is developed, deployed and used responsibly, and that AI can be controlled, explained, and trusted. There is not a single way or best practice to achieve responsible AI, but some common steps include:

- Defining Responsible AI principles and policies

- Adopting robust AI governance and AI risk management practices and procedures across the AI lifecycle

- Ensuring responsible AI is a shared responsibility across an organization, with relevant and expert-informed training and change management

- Assigning appropriate roles, responsibilities and accountability to relevant stakeholders

- Taking a humble, proactive, risk-based, context-dependent and ongoing approach to responsible AI, which reduces costs in the long run and helps you get ahead of issues

The key pillars of responsible AI are: fairness, reliability and accuracy, safety, privacy and security, transparency and explainability, sustainability, and governance and accountability.

Expanding on each of these:

- Fairness: ensuring that AI is fair and does not disproportionately cause negative outcomes and harms to certain subgroups, just because of their identity or other demographic factors.

- Reliability and accuracy: ensuring that AI provides outcomes that are reliable, robust, accurate, appropriate and useful.

- Safety: ensuring that AI does not cause physical, emotional, mental, economic, financial, educational or other harm.

- Privacy and security: ensuring that the data of, from, and about people, organizations, nations etc. are private, safe, and secure from nefarious, illegitimate or excessive access or use.

- Transparency and explainability: ensuring AI systems can be understood, debugged, contested, controlled and held accountable to human oversight.

- Sustainability: ensuring AI contributes to a greener, more sustainable planet and does not cause harm to the health and flourishing of individuals, societies, ecosystems or the world.

- Governance and accountability: ensuring AI is appropriately governed, with appropriate human oversight and accountability, all across the AI lifecycle.

Some of the most often-discussed ethical concerns related to AI are:

- Discrimination and exclusion of certain people

- Job disruption and displacement

- The “loss of truth” due to misinformation, disinformation, hallucinations etc

- Loss of human control and autonomy

- Privacy and security issues

- Non-consensual sexual imagery of both adults and children

- Anthropomorphization and loss of human connection

- Unjustified and unexplainable outcomes, decisions or actions

- Environmental impacts (specifically the over-consumption of water, energy, and rare minerals)

AI bias refers to outcomes, decisions, or impacts that disproportionately affect some groups or beliefs more than others. Although bias can be both positive (e.g. personalization of online content towards your preferences could be defined as a positive bias towards your own needs and wants) and negative, the focus of AI bias is generally on unfair bias due to inadequacies in the data and model, which leads to discriminatory and negative outcomes for certain subgroups of people.
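To make "disproportionately affect some groups" concrete, one simple check is to compare the rate of favourable outcomes across groups. The sketch below computes per-group selection rates and the ratio of the lowest rate to the highest. The data, group labels, and function names are hypothetical, and this is only one of many fairness measures; it does not replace the human and organizational questions about bias raised elsewhere on this page.

```python
from collections import defaultdict


def selection_rates(records):
    """records: iterable of (group, outcome) pairs, where outcome is 1 (favourable) or 0."""
    totals = defaultdict(int)
    favourable = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        favourable[group] += outcome
    return {group: favourable[group] / totals[group] for group in totals}


def disparity_ratio(rates):
    """Lowest group rate divided by the highest; 1.0 means all groups have equal rates."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0


# Made-up decisions: group A receives 8 favourable outcomes out of 10, group B 5 out of 10.
decisions = (
    [("A", 1)] * 8 + [("A", 0)] * 2 +
    [("B", 1)] * 5 + [("B", 0)] * 5
)

rates = selection_rates(decisions)
print(rates)
print(round(disparity_ratio(rates), 3))
```

What counts as an acceptable ratio depends on context, law, and your own policies, so any threshold applied to this number should be set and reviewed by people accountable for the system.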

AI governance encompasses the policies, processes, practices, and procedures that guide the development, deployment, and operation of AI to minimize potential harms and mitigate risks while maximizing its benefits. Some AI governance best practices include defining Responsible AI principles and policies, establishing or integrating robust risk management processes across the AI lifecycle, creating and scaling organizational governance structures with clear roles, responsibilities, and accountability mechanisms, designing and adopting training and culture change programs, and implementing monitoring and evaluation procedures.

- Policies & Principles

  - Set, communicate and enforce clear acceptable use policies

  - Include language in partner or vendor contracts that establishes responsible AI expectations

- UX & User Controls

  - Develop user controls (e.g. engagement settings)

  - Inform users when they are engaging with GenAI (e.g. chatbots, or AI-generated content that could be mistaken for human-created content)

  - Offer alternative, non-algorithmic options

- Human Oversight

  - Conduct regular impact assessments

  - Establish expert oversight mechanisms (e.g. external advisory boards, user feedback mechanisms, bug bounties)

  - Establish appeal and/or contestability processes

  - Implement a rapid-response escalation management process

- Explainability / Transparency

  - Implement clear risk disclaimers

  - Offer educational resources on digital literacy

  - Provide transparency artifacts (e.g. datasheets, model cards, transparency reports, system cards)

- Fairness

  - Develop diverse representation in AI models

  - Develop diverse and inclusive training datasets

  - Conduct regular fairness audits

  - Test to ensure outcomes are fair or within acceptable ranges across sub-groups

- Safety

  - Implement ongoing safety evaluations and content filtering

  - Implement strict age verification processes

  - Develop age-appropriate content filters

  - Implement transparent evaluation criteria

The future of AI is in our hands.

Tim Cook, CEO of Apple
