AI Regulation

EU AI Act

Our AI Compliance Advisors are actively influencing the regulation of AI in Europe, contributing to the creation of the EU AI Act, the first comprehensive AI law. This landmark law, which regulates AI systems operating in the EU, introduces far-reaching changes, including a prohibition on AI in biometric surveillance and compulsory transparency for AI-generated content produced by products such as ChatGPT. The conversion of these amendments into law in August 2024 demonstrates the considerable impact of our advisors in guiding this major regulatory development.

OVERVIEW OF THE EU AI ACT

The EU Artificial Intelligence Act (AI Act) is a regulation on artificial intelligence (AI) first proposed by the European Commission in April 2021. It is the world’s first comprehensive AI regulation: an EU-wide, cross-sectoral legal framework covering the human and material resources used for designing, developing and implementing AI systems, as well as their functioning and output. It sets rules for how AI and related technologies can be developed, deployed and used within a human-centric framework. Given the influence of GDPR, this model of regulation is likely to have effect beyond the EU and may become a global standard. The AI Act entered into force on 1 August 2024 and will generally apply from 2 August 2026, with some parts applying on different dates.

Conformity Assessments:

Before deploying, high-risk AI systems must undergo assessments to ensure they meet the Act’s requirements. Some can be self-assessed by providers, while others need verification by third parties.

Risk management:

- Data governance (ensuring high-quality datasets without biases)

- Documentation (providing proof of compliance)

- Transparency (ensuring users know they’re interacting with an AI system)

- Human oversight (to minimize erroneous outputs)

- Robustness, accuracy, and cybersecurity.

Bans on Certain AI Practices:

The Act prohibits certain AI practices that might harm people’s rights. Examples include systems that manipulate human behavior, exploit vulnerabilities of specific individuals or groups, or utilize social scoring by governments.

EU Database for Stand-Alone High-Risk AI:

- The Act proposes a database to register these AI systems to maintain transparency.

Governance and Implementation:

A European Artificial Intelligence Board would be established to ensure consistent application across member states.

Fines for Non-compliance:

Companies violating the regulations might face hefty fines, similar to the penalties under the General Data Protection Regulation (GDPR).

Timeline EU AI Act (Where are we?)

- Publication in the Official Journal of the EU

- Entry into force of the EU AI Act

- Ban on prohibited AI practices and AI literacy obligations (Chapters I & II)

- GPAI model rules; authorities designated

- High-risk AI systems (HRAIS) rules (Annex III); transparency rules

- HRAIS rules (Annex I)

The EU AI Act came into force on 1 August 2024. There now follows an implementation period of two to three years as various parts of the Act come into force on different dates.

Want to hire

AI Regulation Expert?

Book a call with our experts

All You Need to Know: EU AI Act

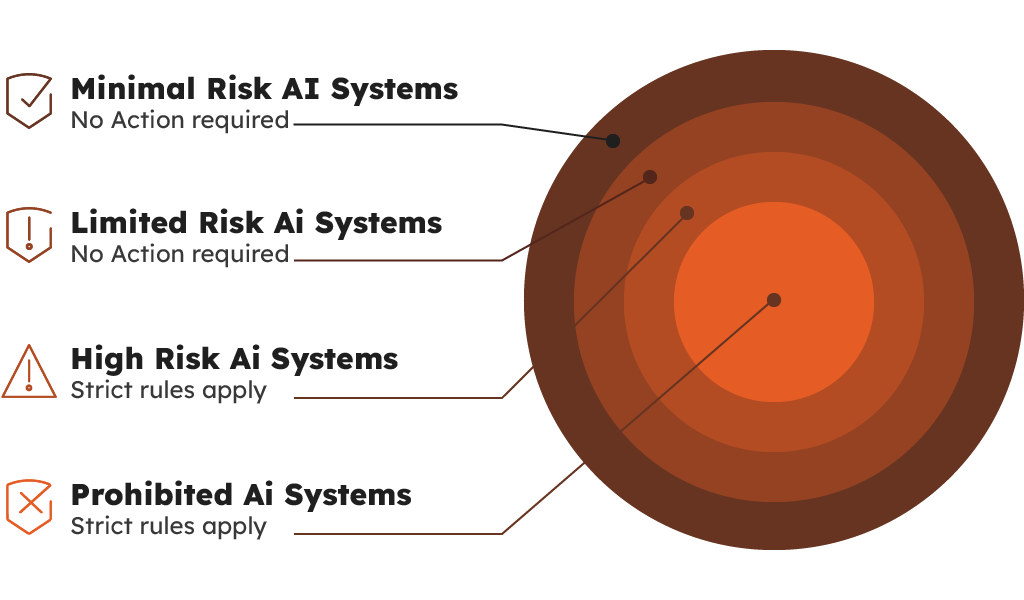

The AI Act classifies AI according to its risk:

- Minimal Risk AI Systems: These systems are unregulated, including most AI applications currently on the EU single market like AI-driven video games and spam filters. However, developments in generative AI are shifting this landscape.

- Limited Risk AI Systems: These systems face less stringent transparency requirements. Developers and deployers need to ensure that users know they are interacting with AI, such as with chatbots and deepfakes.

- High Risk AI Systems: The majority of the AI Act is devoted to regulating high-risk AI systems.

- Prohibited AI Systems: Systems posing unacceptable risks are banned, such as social scoring and manipulative AI.

Who needs to act?

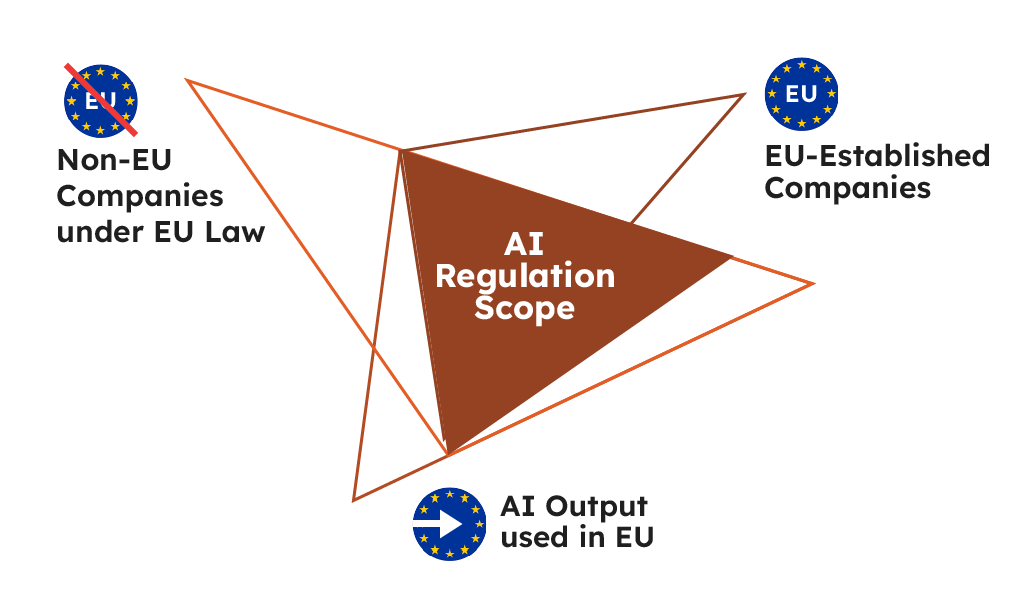

The European AI Act identifies a wide range of roles that organisations can take within its context. These include, for example, providers, authorised representatives, importers, distributors and users. Not all of these roles come with the same obligations under the AI Act. It is also important to state that the AI Act has extra-territorial reach. It applies to any firm operating an AI system within the EU and to firms located outside the EU. To ensure the AI systems used within the EU market conform to the AI Act, the main onus rests with (a) the providers, who place an AI system on the EU market or put into service an AI system for use in the EU market; (b) users located within the EU market; and (c) providers or users of AI systems that are located outside of the EU, but whose system is used (or has an output) on the EU market.

Providers: The primary responsibilities are borne by providers (developers) of high-risk AI systems:

These include entities that aim to introduce or operate high-risk AI systems within the EU, irrespective of whether they are located in the EU or in a third country.

Additionally, providers from third countries are accountable when the output from the high-risk AI systems is utilized within the EU.

Examples of Providers (Developers)

- A company in the US develops an AI system for medical diagnosis that is considered high-risk due to its potential impact on health outcomes. If they intend to market this system in the EU, they must comply with stringent EU regulations, including conducting comprehensive risk assessments and ensuring the system’s reliability and safety.

- A tech startup in India creates an AI-driven recruitment tool that analyzes applicant data to predict job suitability. Since this tool could potentially be used by companies in the EU, the Indian startup, as a provider, would need to ensure the system meets EU standards for transparency, non-discrimination, and data protection, even though they are based outside the EU.

Note: General purpose AI (GPAI):

All GPAI model providers must provide technical documentation, instructions for use, comply with the Copyright Directive, and publish a summary about the content used for training.

Free and open licence GPAI model providers only need to comply with copyright and publish the training data summary, unless they present a systemic risk.

All providers of GPAI models that present a systemic risk – open or closed – must also conduct model evaluations, adversarial testing, track and report serious incidents and ensure cybersecurity protections.
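The three rules in this note can be read as a small decision procedure. The sketch below is one possible encoding, not an official checklist: the `GpaiModel` type, the `gpai_obligations` function and the obligation labels are hypothetical names that paraphrase the note, and whether a model presents a systemic risk is taken as a given input.

```python
from dataclasses import dataclass

# Labels paraphrase the obligations listed in the note; they are
# illustrative names, not terms defined by the AI Act.
BASELINE = [
    "technical documentation",
    "instructions for use",
    "copyright compliance",
    "training content summary",
]
OPEN_LICENCE_ONLY = ["copyright compliance", "training content summary"]
SYSTEMIC_EXTRA = [
    "model evaluations",
    "adversarial testing",
    "serious incident tracking and reporting",
    "cybersecurity protections",
]


@dataclass(frozen=True)
class GpaiModel:
    """Hypothetical description of a GPAI model for this sketch."""
    name: str
    free_and_open_licence: bool
    systemic_risk: bool


def gpai_obligations(model: GpaiModel) -> list[str]:
    """Return the obligation labels that apply to the model's provider."""
    if model.systemic_risk:
        # Systemic-risk models, open or closed, carry the full set.
        return BASELINE + SYSTEMIC_EXTRA
    if model.free_and_open_licence:
        # Open-licence models without systemic risk carry the reduced set.
        return list(OPEN_LICENCE_ONLY)
    return list(BASELINE)
```

For example, `gpai_obligations(GpaiModel("demo", free_and_open_licence=True, systemic_risk=False))` returns only the copyright and training-summary labels, while setting `systemic_risk=True` returns all eight.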

Deployers: Deployers are natural or legal persons that deploy an AI system in a professional capacity; they are distinct from affected end-users:

Deployers of high-risk AI systems are subject to certain responsibilities, although these are less extensive than those assigned to providers (developers).

These responsibilities apply to deployers operating within the EU and to third-country users if the output of the AI system is employed in the EU.

Examples of Deployers

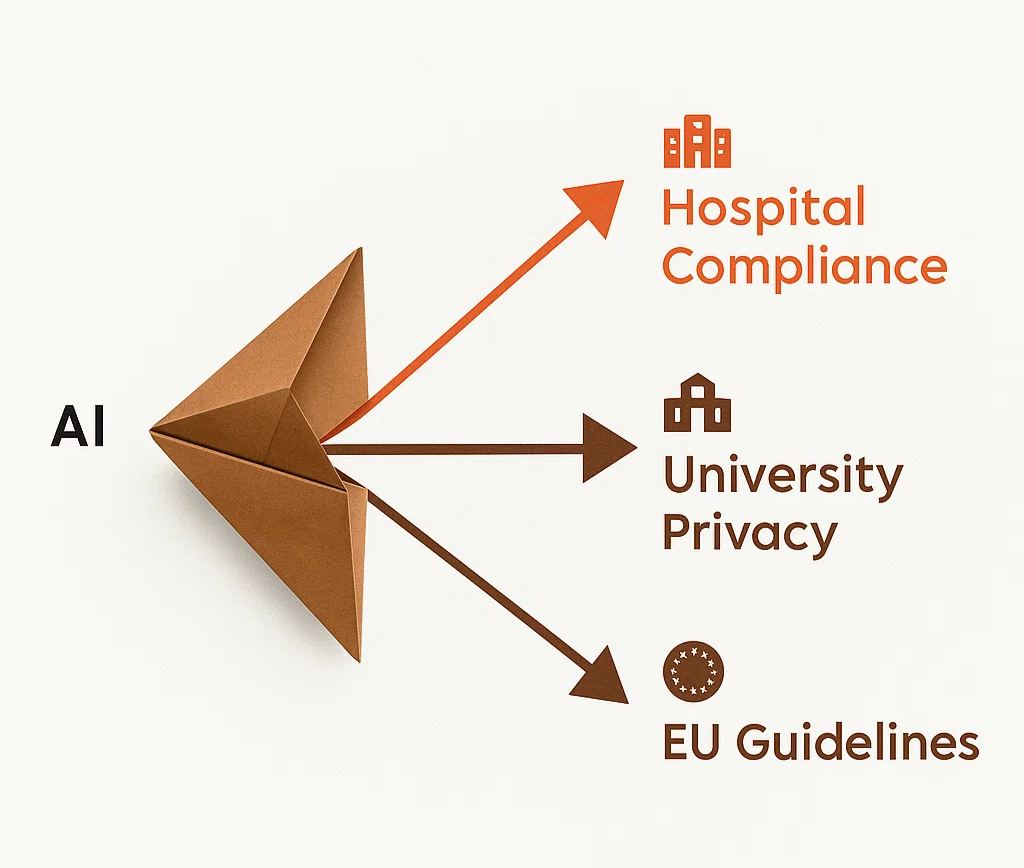

- A German hospital uses an AI system developed in Canada for patient monitoring and treatment recommendations. As a deployer, the hospital must ensure that the system is used in compliance with EU guidelines, which might include verifying that the system’s output is reliable and that it adheres to EU data privacy laws.

- A French university deploys an AI system for monitoring student engagements and performance in online courses. The university, as the deployer, must make students aware that they are interacting with an AI system, and ensure that the AI system’s use respects the students’ privacy and adheres to EU educational standards.

These examples show how both providers and deployers have specific obligations under EU AI regulations, aiming to ensure that AI systems are used safely and ethically within the EU, regardless of where they are developed or operated from.

AI Literacy (Art 3 & 4)

Providers and Deployers must have taken measures by 2 Feb 2025 to ensure AI literacy on the part of their employees and persons involved in the operation/use of AI systems on their behalf.

AI literacy refers to the ability to understand, use, and critically evaluate AI technologies, while also considering their societal implications. It’s about developing a foundational understanding of how AI works, its capabilities and limitations, and how to interact with it responsibly.

Article 3(56):

AI literacy means “skills, knowledge and understanding that allows providers, deployers and affected persons, taking into account their respective rights and obligations in the context of this Regulation, to make an informed deployment of AI systems, as well as to gain awareness about the opportunities and risks of AI and possible harm it can cause”.

Article 4:

“Providers and deployers of AI systems shall take measures to ensure, to their best extent, a sufficient level of AI literacy of their staff and other persons dealing with the operation and use of AI systems on their behalf, taking into account their technical knowledge, experience, education and training and the context the AI systems are to be used in, and considering the persons or groups of persons on whom the AI systems are to be used”.

Key aspects of AI literacy include:

- Understanding AI concepts: This includes knowing the basics of how AI algorithms work, the different types of AI, and the role of humans in AI development.

- Recognizing AI in daily life: Identifying AI applications in various contexts, from news feeds to customer service chatbots.

- Evaluating AI outputs: Critically analyzing the information generated by AI, considering its potential biases and limitations.

- Ethical considerations: Understanding the ethical implications of AI, including fairness, privacy, and accountability.

- Collaborating with AI: Effectively communicating and interacting with AI systems, including creating effective prompts.

People involved with the AI system must be equipped with the necessary knowledge to make informed decisions regarding the AI system they are “using”.

- Must be taken into account:

  - technical knowledge, experience and training of those involved,

  - the context in which the AI system is used,

  - the person (group) affected by the operation,

  - the rights and obligations arising from the AI Act.

- Can be included in the respective measures:

  - understanding the correct application of technical elements in the development phase of the AI system,

  - understanding the measures to be applied during use,

  - understanding the outputs of the AI system, including their interpretation,

  - the necessary knowledge to understand how decisions made with the help of AI can affect a person affected by the AI system,

  - completed training courses.

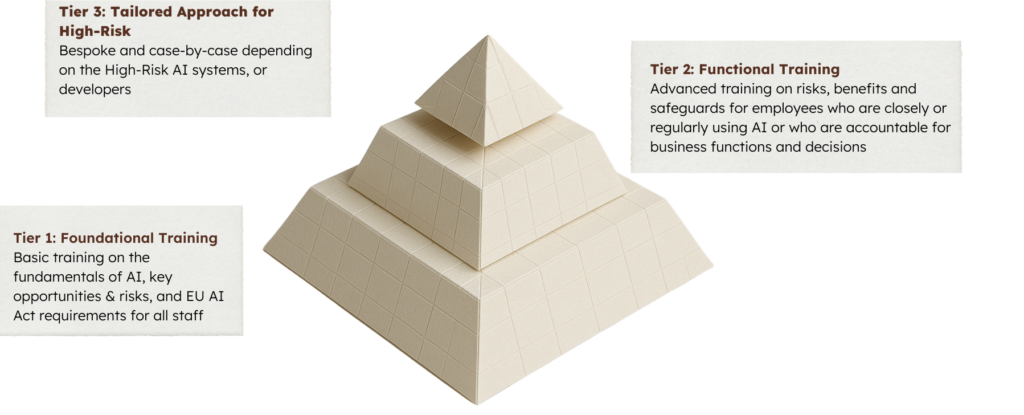

AI Literacy Training Tiers

To understand how T3 can help with your AI literacy and training needs, see our Responsible AI Training page.

Prohibited AI systems (Chapter II, Art. 5)

AI systems:

- deploying subliminal, manipulative, or deceptive techniques to distort behaviour and impair informed decision-making, causing significant harm.

- exploiting vulnerabilities related to age, disability, or socio-economic circumstances to distort behaviour, causing significant harm.

- social scoring, i.e., evaluating or classifying individuals or groups based on social behaviour or personal traits, causing detrimental or unfavourable treatment of those people.

- assessing the risk of an individual committing criminal offenses solely based on profiling or personality traits, except when used to augment human assessments based on objective, verifiable facts directly linked to criminal activity.

- compiling facial recognition databases by untargeted scraping of facial images from the internet or CCTV footage.

- inferring emotions in workplaces or educational institutions, except for medical or safety reasons.

- biometric categorisation systems inferring sensitive attributes (race, political opinions, trade union membership, religious or philosophical beliefs, sex life, or sexual orientation), except labelling or filtering of lawfully acquired biometric datasets or when law enforcement categorises biometric data.

- ‘real-time’ remote biometric identification (RBI) in publicly accessible spaces for law enforcement, except when:

  - targeted searching for missing persons, abduction victims, and people who have been human trafficked or sexually exploited;

  - preventing specific, substantial and imminent threat to life or physical safety, or foreseeable terrorist attack; or

  - identifying suspects in serious crimes (e.g., murder, rape, armed robbery, narcotic and illegal weapons trafficking, organised crime, and environmental crime, etc.).

  - Using AI-enabled real-time RBI is only allowed when not using the tool would cause harm, particularly regarding the seriousness, probability and scale of such harm, and must account for affected persons’ rights and freedoms.

  - Before deployment, police must complete a fundamental rights impact assessment and register the system in the EU database, though, in duly justified cases of urgency, deployment can commence without registration, provided that it is registered later without undue delay.

  - Before deployment, police must also obtain authorisation from a judicial authority or independent administrative authority, though, in duly justified cases of urgency, deployment can commence without authorisation, provided that authorisation is requested within 24 hours. If authorisation is rejected, deployment must cease immediately, deleting all data, results, and outputs.

High risk AI systems (Chapter III)

Classification rules for high-risk AI systems (Art. 6)

High risk AI systems are those:

- used as a safety component or a product covered by EU laws in Annex I AND required to undergo a third-party conformity assessment under those Annex I laws; OR

- those under Annex III use cases (below), except if the AI system:

  - performs a narrow procedural task;

  - improves the result of a previously completed human activity;

  - detects decision-making patterns or deviations from prior decision-making patterns and is not meant to replace or influence the previously completed human assessment without proper human review; or

  - performs a preparatory task to an assessment relevant for the purpose of the use cases listed in Annex III.

- The Commission can add or modify the above conditions through delegated acts if there is concrete evidence that an AI system falling under Annex III does not pose a significant risk to health, safety and fundamental rights. It can also delete any of the conditions if there is concrete evidence that this is needed to protect people.

- An AI system falling under Annex III is always considered high-risk if it profiles individuals, i.e. performs automated processing of personal data to assess various aspects of a person’s life, such as work performance, economic situation, health, preferences, interests, reliability, behaviour, location or movement.

- Providers that believe their AI system, which falls under Annex III, is not high-risk must document such an assessment before placing it on the market or putting it into service.

- 18 months after entry into force, the Commission will provide guidance on determining whether an AI system is high risk, with a list of practical examples of high-risk and non-high-risk use cases.
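The classification rules above can be sketched as a short decision function. This is a simplified reading for illustration only, not legal advice: `SystemProfile` and `is_high_risk` are hypothetical names, each flag is assumed to have been determined by a prior legal assessment, and the profiling rule is assumed to override the four exceptions for Annex III use cases.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SystemProfile:
    """Hypothetical yes/no answers from a prior legal assessment."""
    annex_i_safety_component: bool        # safety component or product under Annex I laws
    annex_i_third_party_assessment: bool  # those laws require third-party conformity assessment
    annex_iii_use_case: bool              # falls under an Annex III use case
    narrow_procedural_task: bool = False
    improves_completed_human_activity: bool = False
    detects_decision_patterns_only: bool = False
    preparatory_task: bool = False
    profiles_individuals: bool = False


def is_high_risk(p: SystemProfile) -> bool:
    """Apply the two routes to high-risk status described above."""
    # Route 1: Annex I product or safety component AND third-party assessment.
    if p.annex_i_safety_component and p.annex_i_third_party_assessment:
        return True
    # Route 2: Annex III use case, unless one of the exceptions applies.
    if not p.annex_iii_use_case:
        return False
    # Assumption: profiling keeps an Annex III system high-risk regardless of exceptions.
    if p.profiles_individuals:
        return True
    exempt = any([
        p.narrow_procedural_task,
        p.improves_completed_human_activity,
        p.detects_decision_patterns_only,
        p.preparatory_task,
    ])
    return not exempt
```

A provider relying on one of the exceptions would still need to document that assessment, which this sketch does not model.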

Requirements for providers of high-risk AI systems (Art. 8-17)

High risk AI providers must:

- Establish a risk management system throughout the high risk AI system’s lifecycle;

- Conduct data governance, ensuring that training, validation and testing datasets are relevant, sufficiently representative and, to the best extent possible, free of errors and complete according to the intended purpose.

- Draw up technical documentation to demonstrate compliance and provide authorities with the information to assess that compliance.

- Design their high risk AI system for record-keeping to enable it to automatically record events relevant for identifying national level risks and substantial modifications throughout the system’s lifecycle.

- Provide instructions for use to downstream deployers to enable the latter’s compliance.

- Design their high risk AI system to allow deployers to implement human oversight.

- Design their high risk AI system to achieve appropriate levels of accuracy, robustness, and cybersecurity.

- Establish a quality management system to ensure compliance.

High Risk AI Systems

| Category | Description |

|---|---|

| Non-banned biometrics |

|

| Critical infrastructure | Safety components in the management and operation of critical digital infrastructure, road traffic and the supply of water, gas, heating, and electricity. |

| Education and vocational training |

|

| Employment, workers management, and access to self-employment |

|

| Access to and enjoyment of essential public and private services |

|

| Law enforcement |

|

| Migration, asylum, and border control management |

|

| Administration of justice and democratic processes |

|

General purpose AI (GPAI) (Chapter V)

GPAI model means an AI model, including when trained with a large amount of data using self-supervision at scale, that displays significant generality and is capable of competently performing a wide range of distinct tasks regardless of the way the model is placed on the market and that can be integrated into a variety of downstream systems or applications. This does not cover AI models that are used before release on the market for research, development and prototyping activities.

GPAI system means an AI system which is based on a general purpose AI model, that has the capability to serve a variety of purposes, both for direct use as well as for integration in other AI systems.

GPAI systems may be used as high risk AI systems or integrated into them. GPAI system providers should cooperate with such high risk AI system providers to enable the latter’s compliance.

All providers of GPAI models must (Art. 53):

- Draw up technical documentation, including training and testing process and evaluation results.

- Draw up information and documentation to supply to downstream providers that intend to integrate the GPAI model into their own AI system in order that the latter understands capabilities and limitations and is enabled to comply.

- Establish a policy to respect the Copyright Directive.

- Publish a sufficiently detailed summary about the content used for training the GPAI model.

Free and open licence GPAI models – whose parameters, including weights, model architecture and model usage are publicly available, allowing for access, usage, modification and distribution of the model – only have to comply with the latter two obligations above, unless the free and open licence GPAI model is systemic.

GPAI models are considered systemic when the cumulative amount of compute used for their training is greater than 10^25 floating point operations (FLOPs) (Art. 51). Providers must notify the Commission within 2 weeks if their model meets this criterion (Art. 52). The provider may present arguments that, despite meeting the criterion, their model does not present systemic risks. The Commission may decide on its own, or via a qualified alert from the scientific panel of independent experts, that a model has high impact capabilities, rendering it systemic.
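To make the compute criterion concrete, the sketch below compares an estimated cumulative training compute with the 10^25 threshold. The estimate uses a common rule of thumb of roughly 6 floating point operations per parameter per training token; that heuristic, the function names and the example model sizes are assumptions for illustration and do not come from the Act.

```python
# Threshold on cumulative training compute, as summarised above (Art. 51).
SYSTEMIC_COMPUTE_THRESHOLD = 1e25


def estimate_training_compute(parameters: float, training_tokens: float) -> float:
    """Rough estimate of training compute in floating point operations.

    Uses the ~6 * parameters * tokens rule of thumb for dense models.
    This heuristic is an assumption, not a method prescribed by the Act.
    """
    return 6.0 * parameters * training_tokens


def presumed_systemic(training_compute: float) -> bool:
    """True when cumulative training compute is greater than the threshold."""
    return training_compute > SYSTEMIC_COMPUTE_THRESHOLD


# Hypothetical models: 70B and 400B parameters, each trained on 15T tokens.
small = estimate_training_compute(70e9, 15e12)   # about 6.3e24
large = estimate_training_compute(400e9, 15e12)  # about 3.6e25
print(presumed_systemic(small), presumed_systemic(large))  # prints "False True"
```

A real assessment would rely on measured training compute rather than an estimate, and the Commission can still designate a model as systemic on other grounds.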

In addition to the four obligations above, providers of GPAI models with systemic risk must also (Art. 55):

- Perform model evaluations, including conducting and documenting adversarial testing to identify and mitigate systemic risk.

- Assess and mitigate possible systemic risks, including their sources.

- Track, document and report serious incidents and possible corrective measures to the AI Office and relevant national competent authorities without undue delay.

- Ensure an adequate level of cybersecurity protection.

All GPAI model providers may demonstrate compliance with their obligations if they voluntarily adhere to codes of practice until European harmonised standards are published, compliance with which will lead to a presumption of conformity (Art. 56). Providers that don’t adhere to codes of practice must demonstrate alternative adequate means of compliance for Commission approval.

Codes of practice (Art. 56)

- Will account for international approaches.

- Will cover, but not necessarily be limited to, the above obligations, particularly the relevant information to include in technical documentation for authorities and downstream providers, identification of the type and nature of systemic risks and their sources, and the modalities of risk management accounting for specific challenges in addressing risks due to the way they may emerge and materialise throughout the value chain.

- The AI Office may invite GPAI model providers and relevant national competent authorities to participate in drawing up the codes, while civil society, industry, academia, downstream providers and independent experts may support the process.

Governance (Chapter VI)

- The AI Office will be established, sitting within the Commission, to monitor the effective implementation and compliance of GPAI model providers (Art. 64).

- Downstream providers can lodge a complaint regarding an upstream provider’s infringement with the AI Office (Art. 89).

- The AI Office may conduct evaluations of the GPAI model to (Art. 92):

  - assess compliance where the information gathered under its powers to request information is insufficient;

  - investigate systemic risks, particularly following a qualified report from the scientific panel of independent experts (Art. 90).

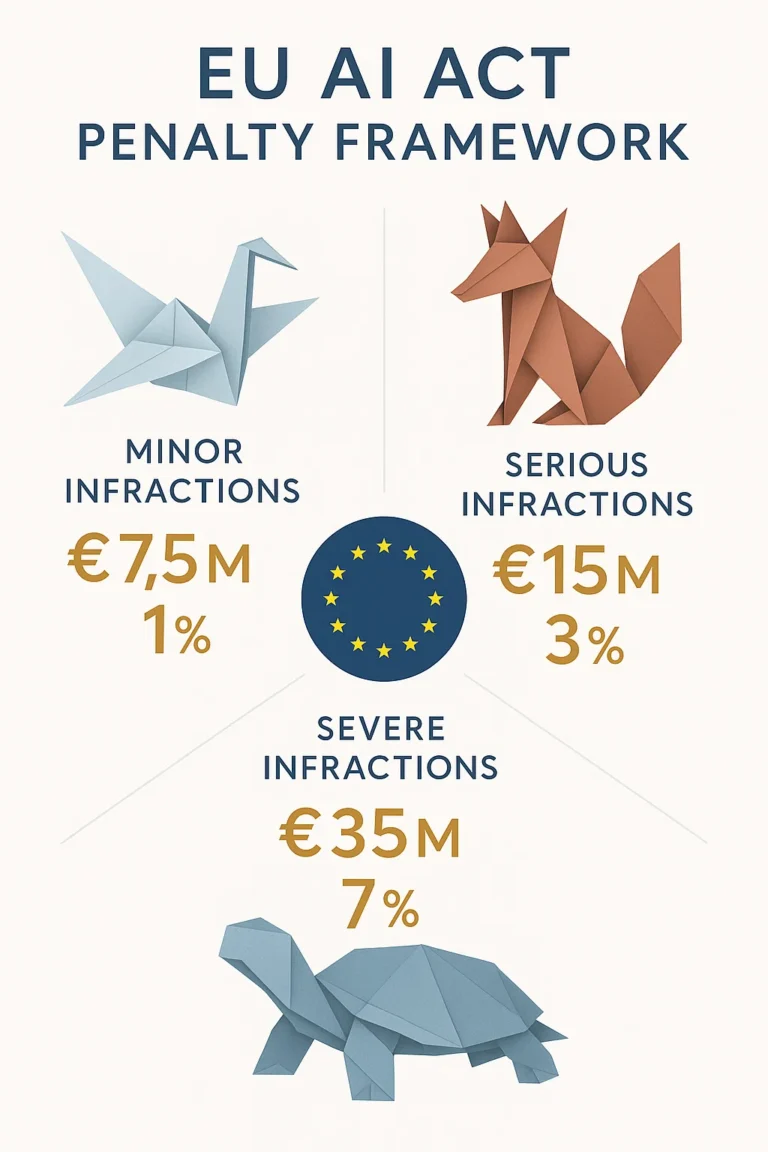

What are the penalties for non-compliance?

| Type of Infringement | Maximum Fine | Basis |
|---|---|---|
| Use of prohibited AI practices (e.g. social scoring, manipulative AI) | €35 million or 7% of global annual turnover (whichever is higher) | Art. 99(3)(a) |
| Breach of data governance, transparency, or risk management obligations for high-risk AI systems | €15 million or 3% of global annual turnover | Art. 99(3)(b) |
| Supplying incorrect, incomplete or misleading information to authorities | €7.5 million or 1% of global annual turnover | Art. |
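The fine ceilings in the table reduce to simple arithmetic: a fixed amount compared with a share of turnover. The sketch below is illustrative only. The tier keys and the `max_fine` function are hypothetical names, and it assumes the "whichever is higher" rule stated for the first tier also applies to the other two.

```python
from decimal import Decimal

# (fixed ceiling in EUR, share of global annual turnover) per tier,
# taken from the table above. Tier keys are illustrative names.
FINE_TIERS = {
    "prohibited_practice": (Decimal("35000000"), Decimal("0.07")),
    "high_risk_obligation_breach": (Decimal("15000000"), Decimal("0.03")),
    "misleading_information": (Decimal("7500000"), Decimal("0.01")),
}


def max_fine(infringement: str, global_annual_turnover_eur: Decimal) -> Decimal:
    """Return the maximum fine for an undertaking in the given tier.

    Assumption: the higher of the fixed ceiling and the turnover share
    applies in every tier, as stated for prohibited practices.
    """
    fixed_ceiling, turnover_share = FINE_TIERS[infringement]
    return max(fixed_ceiling, turnover_share * global_annual_turnover_eur)
```

For a hypothetical firm with €1 billion in global annual turnover, `max_fine("prohibited_practice", Decimal("1000000000"))` gives €70 million, because 7% of turnover exceeds the €35 million fixed amount. At €100 million in turnover the fixed €35 million is the higher figure.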

WHO DOES IT IMPACT?

The EU AI Act applies to both regulated and unregulated firms.

Asset Managers

Banks

Supervisors

Commodity Houses

Fintechs

How Can We Help?

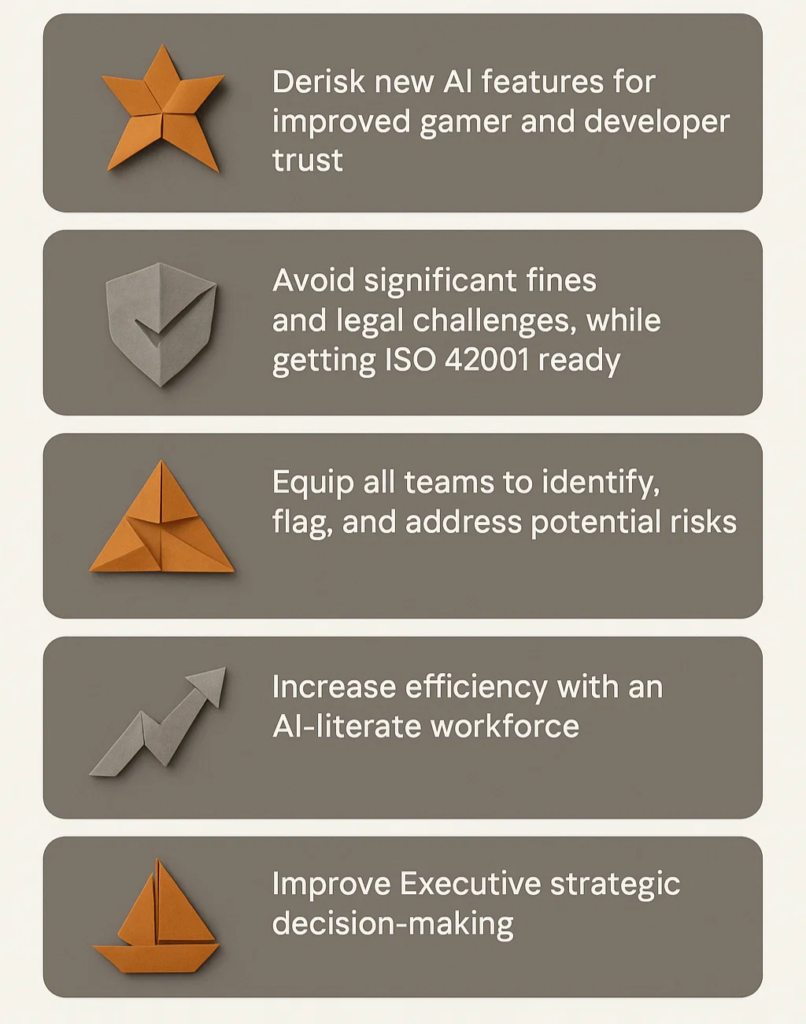

In response to the AI Act, the European Union’s regulation for the safe and ethical development and use of artificial intelligence (AI), organizations can engage in various activities to ensure compliance and ethical application of AI. Working with senior AI and Compliance advisors who are at the forefront of AI supervisory dialogue, we can support the following activities:

1. Compliance Assessment and Advisory

Our Compliance Experts can help you understand the AI Act, identify whether an AI system falls under the high-risk category, and determine specific compliance requirements.

2. Risk Management and Mitigation Strategies

This involves assessing risks associated with AI systems and developing strategies to mitigate these risks, especially for high-risk AI applications where strict regulatory adherence is mandatory.

3. Ethical AI Frameworks Development

Our Compliance SMEs will set up or review your ethical AI frameworks and guidelines in line with the AI Act’s requirements, focusing on fairness, accountability, transparency, and data governance.

4. Technical and Operational Support

Our Technology Compliance SMEs ensure that AI systems are designed, developed, and deployed in compliance with the AI Act, which may include updating or modifying existing systems.

5. Training and Capacity Building

Our AI Compliance SMEs will help design and roll out training programs for employees on the legal and ethical aspects of AI under the AI Act, to foster organization-wide understanding and best practices in AI usage.

6. Data Governance and Privacy Compliance

Our Compliance Experts ensure alignment with the AI Act and other relevant regulations such as GDPR, focusing on data privacy, protection, and management.

7. Monitoring and Reporting Mechanisms

T3 Compliance SMEs establish continuous monitoring and reporting processes, as mandated by the AI Act, especially for high-risk AI systems.

8. Strategic Planning for AI Initiatives

Our Technical Compliance Consultants plan AI projects to comply with the AI Act while fulfilling business goals.

9. Stakeholder Engagement and Communication

Our Compliance Experts actively engage with stakeholders, including regulatory bodies, customers, and partners, to discuss AI utilization and compliance.

10. Impact Assessment and Auditing

T3 Compliance SMEs conduct regular impact assessments and audits of AI systems to ensure ongoing compliance and identify areas for improvement.

11. Policy Advocacy and Regulatory Insights

Our Technical Compliance Consultants stay updated on the changing regulatory landscape and engage in policy discussions pertaining to AI.

Frequently Asked Questions

The EU AI Act is the world’s first comprehensive regulatory framework designed to govern the development, deployment, and use of Artificial Intelligence (AI) across the European Union. It classifies AI applications into four risk categories – unacceptable, high, limited, and minimal risk – to ensure AI technologies are safe, transparent, and respect fundamental rights. The Act introduces stringent compliance requirements, especially for high-risk AI systems, covering areas like data governance, risk assessments, and human oversight.

The law was passed in the European Parliament on 13 March 2024, by a vote of 523 for, 46 against, and 49 abstaining. Implementation of the Act has started in February 2025.

While the EU AI Act primarily targets organizations operating within the EU, it also has extraterritorial reach, meaning it applies to non-EU companies if their AI systems affect individuals within the EU. The UK is developing its own AI regulatory framework; however, businesses trading with the EU must still comply with the EU AI Act.

The EU AI Act categorizes AI systems into four distinct risk levels:

- Unacceptable Risk – Prohibited AI uses, such as social scoring by governments.

- High Risk – Strict regulatory controls, covering areas like recruitment, credit scoring, and healthcare.

- Limited Risk – Subject to transparency obligations, such as chatbot disclosures.

- Minimal Risk – No specific regulations, representing the vast majority of AI applications.

Administrative fines of up to EUR 35 000 000 or, if the offender is an undertaking, up to 7 % of its total worldwide annual turnover for the preceding financial year, whichever is higher.

The EU AI Act will be enforced by national supervisory authorities in each member state, coordinated by the European Artificial Intelligence Board (EAIB). These authorities will oversee compliance, conduct audits, and impose penalties where necessary.

February 2025 marked the start of enforcement of the EU AI Act across all EU member states. This first phase introduced the AI literacy requirements and the prohibition of AI applications posing unacceptable risk, and laid the foundation for stricter safety, transparency, and risk assessment standards. The remaining provisions are being implemented in phased rollouts to allow a smooth transition and regulatory alignment.

The GDPR focuses on the protection of personal data and user privacy, while the EU AI Act regulates the use of AI technologies based on their risk impact. While both share principles of transparency and accountability, the AI Act extends beyond personal data, covering algorithmic fairness, safety, and ethical AI deployment.

T3 offers expert guidance and support for organizations navigating the complexities of the EU AI Act. Our services include:

- Risk Assessment & Gap Analysis

- Policy Development & Implementation

- Compliance Monitoring & Reporting

- Audit Preparation & Support

Our team of regulatory experts ensures your AI solutions meet compliance standards, reducing regulatory risks and enhancing trust.
